January 25, 2025

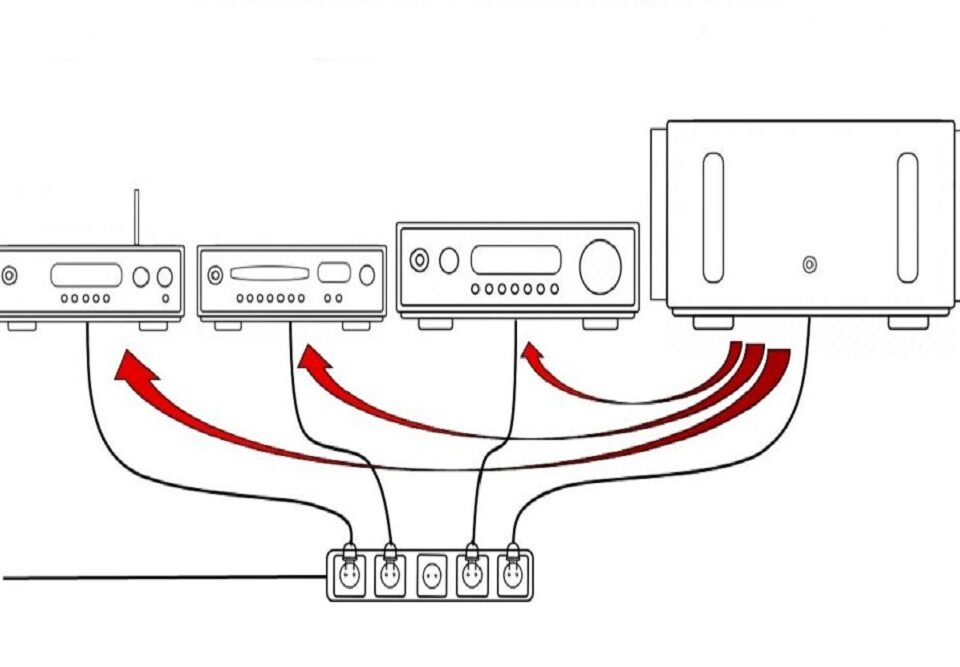

ANALOG and DIGITAL, the eternal challenge

We are deeply analogue beings and the world itself is analogue. The music we listen to has been created and reproduced by analogue means since its inception, and to a large extent still is.

Sounds, understood as sound waves, are born analogue and reach our ears analogue.

What may change along the way is how these sounds, once generated, are recorded, transported and processed.

Whereas until a few decades ago only the analogue method existed, today it is likely that at least part of our reproduction system is digital, as is the case with CD players and so-called ‘liquid’ music, for example.

Without entering into a discussion of which of these two methods is better, we would like to focus on what we consider the biggest and most significant difference between them, one that goes to the very roots of how they work.

In summary, analogue approaches its upper limit more or less progressively. For example, the distortion of a loudspeaker increases as its excursion increases, just as a power amplifier begins to show the limits of its design as it approaches its maximum power. Both express a natural ‘sense of fatigue’, similar to what we experience in a thousand everyday situations: we get tired much faster when running than when walking.
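The idea of a limit that arrives progressively can be illustrated with a toy model. The sketch below assumes a hyperbolic-tangent transfer curve as a stand-in for a saturating analogue stage. The curve, the function names and the drive levels are all assumptions chosen for illustration, not a model of any real amplifier or loudspeaker.

```python
import math

def soft_stage(x: float) -> float:
    """Toy analogue stage: nearly linear for small inputs, saturating gradually (assumed tanh curve)."""
    return math.tanh(x)

def deviation_from_linear(drive: float) -> float:
    """Fraction by which the output falls short of an ideal linear stage at this drive level."""
    return 1.0 - soft_stage(drive) / drive

# The shortfall grows smoothly with the drive level; there is no sudden cliff.
for drive in (0.1, 0.5, 1.0, 2.0):
    print(f"drive {drive:>4}: {deviation_from_linear(drive):.1%} short of linear")
```

In this toy model the error is negligible at low drive and grows steadily as the stage is pushed harder, which is the ‘fatigue’ behaviour described above.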

Loosely speaking, we could therefore say that the quality of analogue is inversely related to the amplitude of what it has to reproduce: the larger the signal, the lower the quality.

In digital, the opposite happens: quality grows with the amplitude of the signal being represented, because the amplitude determines how many bits are actually used to encode it. A signal at maximum amplitude uses all the available bits (e.g. 16 in the Red Book standard for Audio CDs), while weaker signals use fewer bits, until they disappear altogether when their level falls below a single bit (the experts will forgive me for this deliberately simplified picture, intended to make the idea comprehensible and usable for everyone).
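The relationship between signal level and bits in use can be sketched in a few lines. The example below follows the same simplified picture as the text: plain rounding to 16-bit steps, with no dither or noise shaping. The function names and the chosen levels (in dB relative to full scale) are assumptions made for illustration.

```python
import math

BITS = 16                          # Red Book word length
FULL_SCALE = 2 ** (BITS - 1) - 1   # largest positive sample value (32767)

def quantize(x: float) -> int:
    """Round a sample in [-1.0, 1.0] to the nearest 16-bit step (no dither)."""
    return max(-FULL_SCALE - 1, min(FULL_SCALE, round(x * FULL_SCALE)))

def bits_in_use(level_dbfs: float) -> int:
    """Bits (magnitude plus sign) needed for the peak of a signal at this level."""
    peak = quantize(10 ** (level_dbfs / 20))
    return 0 if peak == 0 else peak.bit_length() + 1

# Each step down in level leaves fewer bits to describe the signal.
for level in (0, -20, -40, -60, -80, -100):
    print(f"{level:>5} dBFS -> {bits_in_use(level)} bits")
```

Under these assumptions a full-scale signal uses all 16 bits, a signal at -60 dBFS is described by only 7, and one at -100 dBFS rounds to zero and vanishes.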

What are the consequences of this profound difference in behaviour between the two approaches?

To answer this, we have to consider that, in a music recording, certain types of information usually sit at a very low level yet are fundamental for correct reproduction. In this range we find many of the upper harmonics of the instruments, which characterise their timbre, and most of the spatial micro-information that defines the environment in which the instruments were recorded.

It is clear that the loss of this information impairs the correct reproduction of the original timbre and its spatial localisation within the recreated acoustic scene.

Both systems are, however, limited: at the bottom by the noise floor, at the top by the maximum manageable value (number of bits, maximum linear excursion, etc.). These limits define the maximum dynamic range of each system.
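As a rough illustration, the span between those two limits is usually expressed in decibels as the ratio of the ceiling to the floor. The sketch below applies that ratio to an ideal 16-bit word, taking one quantisation step as the floor. The function names are hypothetical, and real converters and analogue stages will fall short of these ideal figures.

```python
import math

def dynamic_range_db(ceiling: float, floor: float) -> float:
    """Ratio between the largest and smallest usable level, in decibels."""
    return 20 * math.log10(ceiling / floor)

def pcm_dynamic_range_db(bits: int) -> float:
    """Ideal range of an N-bit word: 2**bits steps at the top, one step at the bottom."""
    return dynamic_range_db(2 ** bits, 1)

print(round(pcm_dynamic_range_db(16), 1))  # 16-bit, as on Audio CD -> 96.3
```

The same `dynamic_range_db` function can be applied to an analogue stage by passing its maximum clean level and its noise floor, measured in the same unit.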

So which system should you prefer?

There is no definitive answer, except that it ‘depends’ on many factors, including the complexity of the system you want to equip yourself with, the cost you intend to incur, the quality you want to achieve, and what you are willing to give up.

Everyone will therefore find the answer that is right for them.